Our precision lets us offer lower prices on an enormous portion of all property insurance policies while maintaining low loss ratios.

Normally insurance companies sacrifice performance in order to grow. We don’t.

Lower Loss Ratios

Rapid Organic Growth

Better Portfolio Construction

We have developed Skynet, the world’s most precise risk technology for property & casualty insurance, by going back to the scientific principles behind the events that cause insurance claims.

Property insurance is simple.

Pricing it properly isn’t.

Skynet – Automated, Scientific Underwriting.

Done live at the point of sale.

Today, insurance pricing is founded on the practice of grouping common risks.

This concept needed to be abolished entirely in favour of ground-up analysis based on the scientific properties of the events that cause insurance claims.

Grouping common risks is an oversimplified way to deal with a very complex problem. However, underwriting property insurance risk with pinpoint accuracy can’t be achieved just by applying big data and artificial intelligence either. This isn’t like every other data science problem.

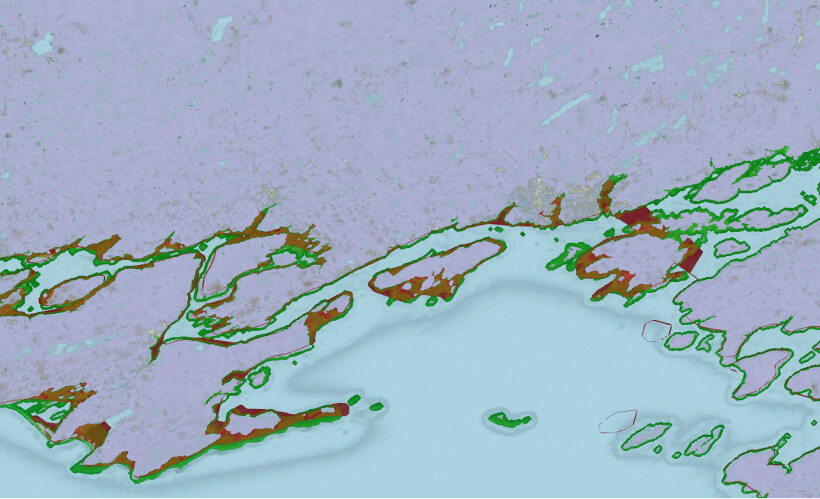

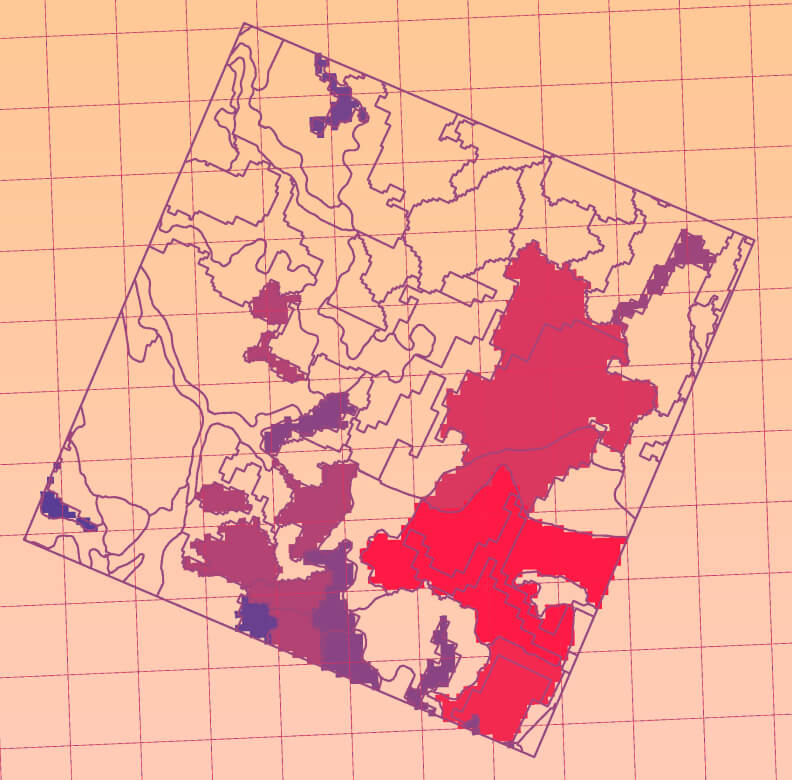

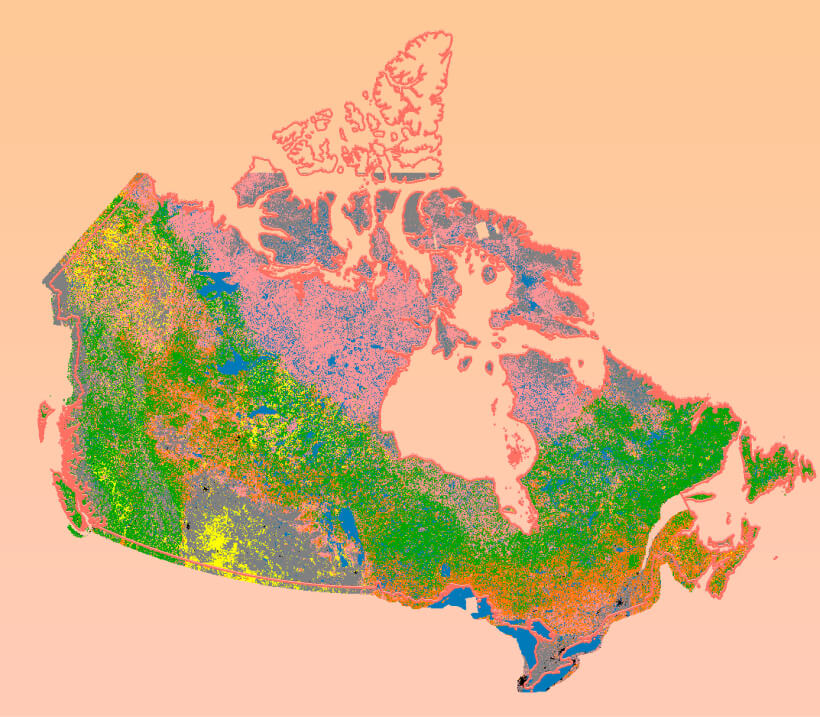

Building centroid-based refinement of a regular 1 km grid

Lake surge exposures for Lake Ontario, Canada

The risks in property insurance are driven by natural hazards (such as weather) which are capable of producing loss events that are far outside the parameters of any actual event in recorded history. For this reason, physics-based frameworks capturing the underlying dynamics are necessary to model the impact of all plausible events.

As such, underwriting property risk requires a brand new approach. That’s what we’ve built.

Here are the principles we followed:

This isn’t a novel concept, but following it strictly is crucial to our approach. It is also something preached but rarely practiced properly in today’s industry.

The evaluation of risk of flood losses should be independent from that for house fires. Which should be completely separate from earthquakes. And so on.
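As a rough illustration of what keeping perils separate can look like in code, the sketch below evaluates each peril with its own function and only reads the attributes relevant to that peril. The `Home` fields, the two toy models and every coefficient are hypothetical and chosen purely for illustration; they are not Skynet's models or parameters.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass(frozen=True)
class Home:
    # Hypothetical rating attributes, for illustration only.
    replacement_cost: float
    flood_depth_100yr_m: float  # assumed 1-in-100-year flood depth at the building
    has_wood_fireplace: bool


def flood_expected_loss(home: Home) -> float:
    # Toy flood model: reads flood exposure only, never fire attributes.
    damage_ratio = min(1.0, 0.25 * home.flood_depth_100yr_m)
    return 0.01 * damage_ratio * home.replacement_cost


def house_fire_expected_loss(home: Home) -> float:
    # Toy house-fire model: reads fire attributes only, never flood exposure.
    annual_probability = 0.002 if home.has_wood_fireplace else 0.001
    return annual_probability * 0.5 * home.replacement_cost


# Each peril is registered and evaluated on its own.
PERIL_MODELS: Dict[str, Callable[[Home], float]] = {
    "flood": flood_expected_loss,
    "house_fire": house_fire_expected_loss,
}


def expected_loss_by_peril(home: Home) -> Dict[str, float]:
    """Return a separate expected annual loss for every peril."""
    return {peril: model(home) for peril, model in PERIL_MODELS.items()}
```

Because the flood function never looks at the fireplace flag, changing that flag moves only the house-fire component. A rating structure that blends perils into one territory or class factor cannot offer that guarantee.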

Pricing starts with data about the thing being insured.

Collecting this data isn’t unusual in insurance. What is unusual is actually using all of it to determine price.

Normally all this data is used as a filter – companies don’t want to insure anyone under 25. Or anyone with more than 3 claims. Or a house with a wood burning fireplace. Or one located north of a certain latitude.

The industry is missing the point.

Insurance Policies

Through extensive scientific analysis, we found that as much as

of all home insurance policies are materially mispriced

Insurance Market

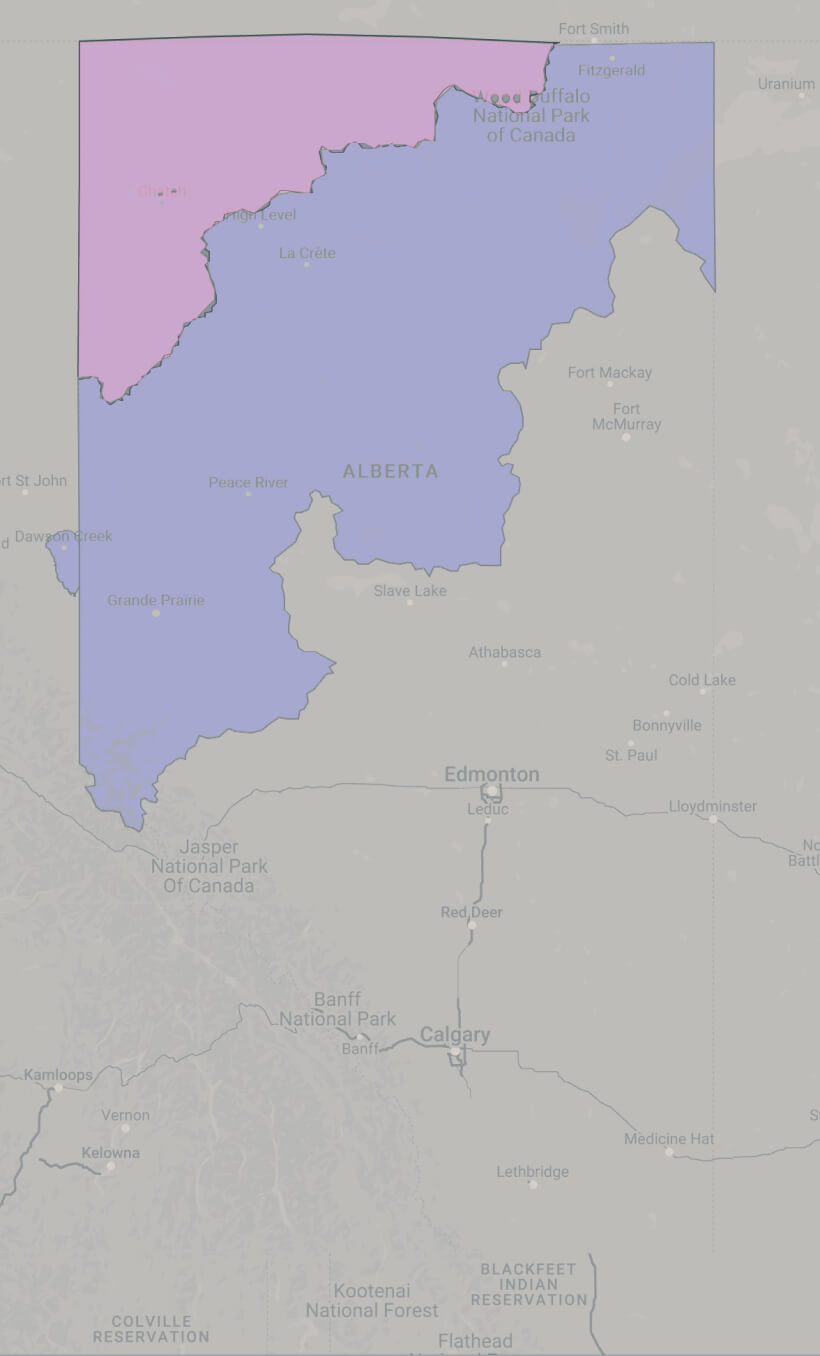

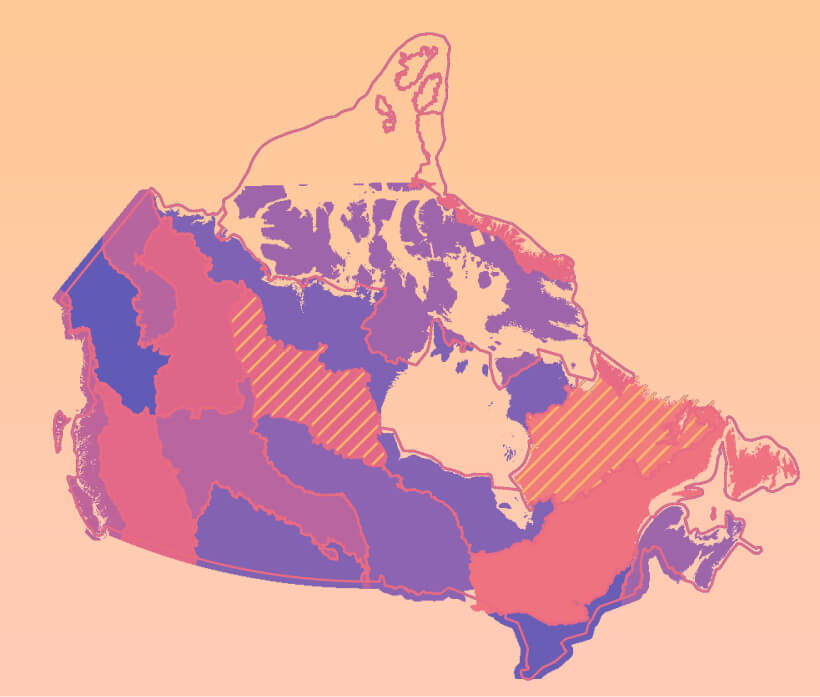

Canadian Homeowner’s Insurance Market

United States Homeowner’s Insurance Market

Global Homeowner’s Insurance Market

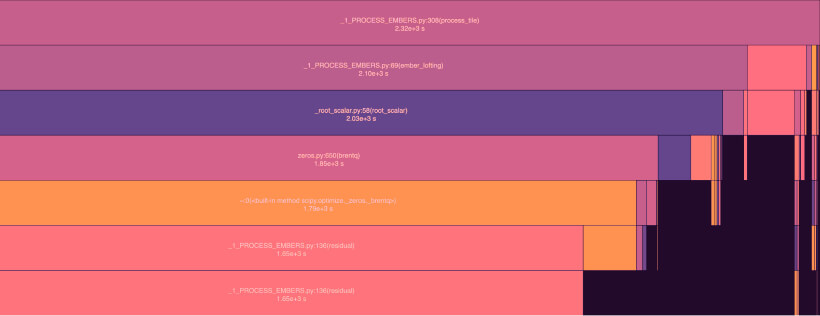

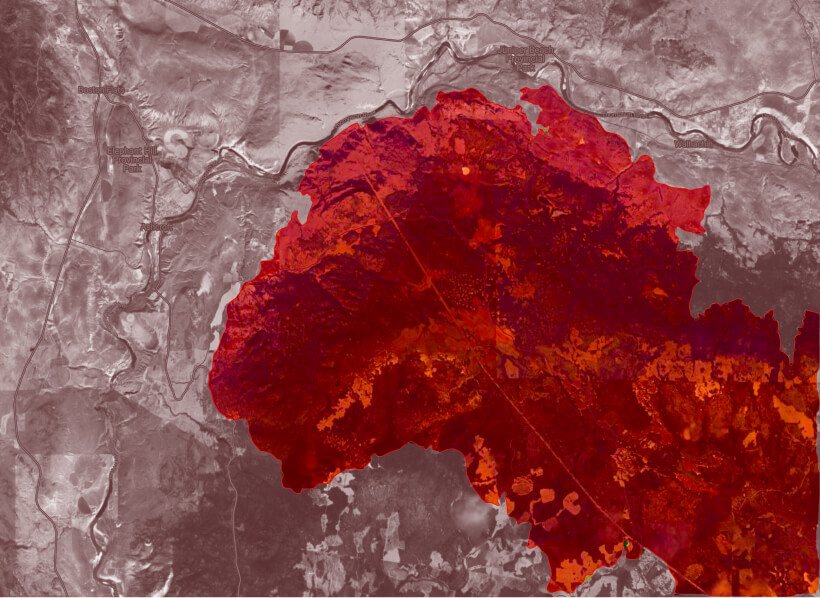

Call stack of an ember lofting routine run on a wildfire in northern Alberta, Canada

Vegetation photoplot locations for Central British Columbia, Canada

It’s hard to analyse the risk of an event that’s never happened.

But black swan events do happen – earthquakes, tsunamis, wildfires etc. – all with devastating impact.

The industry needs to stop pricing for what has happened and start pricing for what could happen. We call this exposure-based pricing.
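To make the contrast concrete, here is a minimal Monte Carlo sketch. It compares a price derived from a claim-free history with one derived from simulating a catalogue of events that could happen. The catalogue, its probabilities and damage ratios, and the function names are all assumptions made for illustration; a real stochastic model would derive event frequency and severity from the physics of each peril.

```python
import random
from typing import List, Sequence, Tuple

# One catalogue entry: (annual occurrence probability, damage ratio if it occurs).
Event = Tuple[float, float]


def simulate_annual_losses(
    events: Sequence[Event],
    insured_value: float,
    n_years: int,
    seed: int = 0,
) -> List[float]:
    """Simulate n_years of losses for one home from a catalogue of possible events."""
    rng = random.Random(seed)
    annual_losses = []
    for _ in range(n_years):
        year_loss = 0.0
        for annual_probability, damage_ratio in events:
            # Each event is assumed to occur independently within a year.
            if rng.random() < annual_probability:
                year_loss += damage_ratio * insured_value
        # In this toy model a home cannot lose more than its insured value.
        annual_losses.append(min(year_loss, insured_value))
    return annual_losses


def mean_loss(annual_losses: Sequence[float]) -> float:
    return sum(annual_losses) / len(annual_losses)


# Hypothetical catalogue: a 1-in-500-year wildfire and a 1-in-200-year flood.
catalogue = [(1 / 500, 0.9), (1 / 200, 0.3)]

# Experience-based view: 30 claim-free years imply an expected loss of zero.
claim_free_history = [0.0] * 30
experience_based = mean_loss(claim_free_history)

# Exposure-based view: simulate many plausible years, including events
# that never appear in the 30-year record.
exposure_based = mean_loss(simulate_annual_losses(catalogue, 500_000.0, 100_000))
```

With these made-up inputs, the expected annual loss before the cap is 0.002 × 0.9 × 500,000 + 0.005 × 0.3 × 500,000 = 1,650, and the simulated mean should land near that figure. The claim-free history prices the same home at zero.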

Insurers have long held the view that catastrophe risk is about risk transfer to the reinsurance market, and that the ultimate responsibility for establishing a proper price for such risks lies in the hands of the reinsurers.

But catastrophic events usually cause 10-50% of all personal property claims, depending on the market.

The risk of those claims happening to a specific home can impact:

Catastrophe risk is an unavoidable element of a property insurer’s business.

In our opinion, primary insurance carriers need to start understanding and pricing for the catastrophe risks inherent in every policy they write, irrespective of whether the risk is being reinsured out.

Flood watch / warning zones for northern Alberta, Canada for Monday, May 9th, 2022

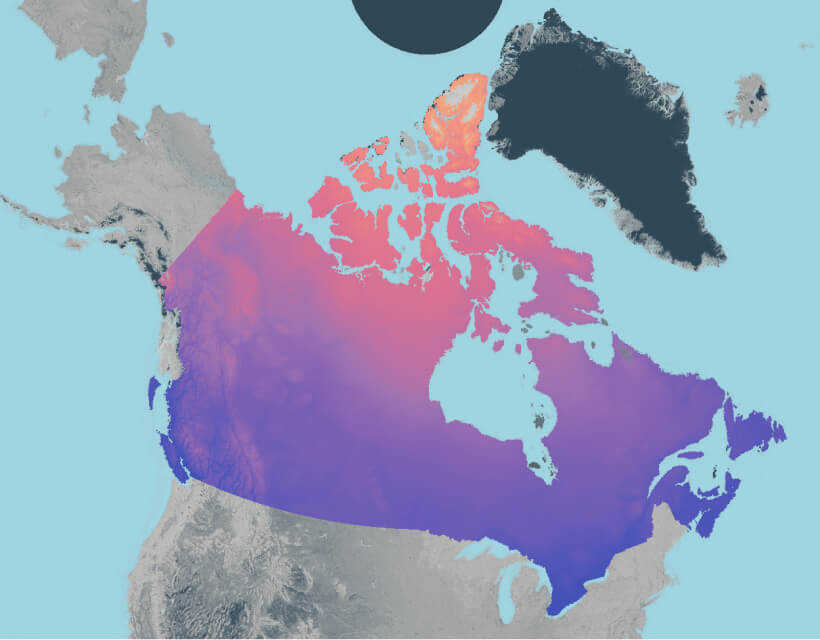

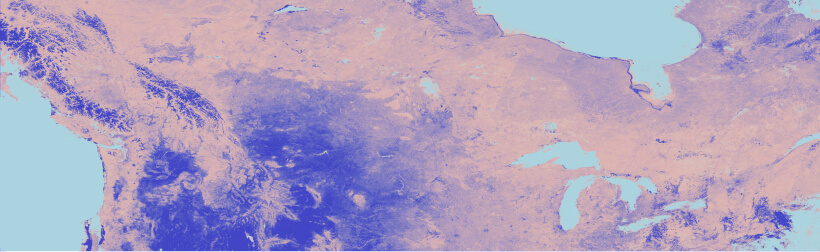

Mean annual temperature at a 300 arcsecond resolution for Canada

The risk of a home burning from an unattended oven is not the same as that from a wildfire.

We isolated pure non-catastrophic risk, and applied extremely selective machine learning algorithms to consider the fine details of each policy.

The purity of our approach, combined with the sophistication of our non-catastrophe models, results in much richer and more precise recognition of the components of risk, meaning we have unprecedented insights into the driving forces that cause claims.

Classic frameworks that use territories combined with classes of risk and discounts cannot match our capabilities.

To accurately price for a risk, we must be able to model the underlying scientific properties of the events that cause claims, using full-scale stochastic models for all perils.

We do everything the hard way so that we can pick up every detail that might impact the risk:

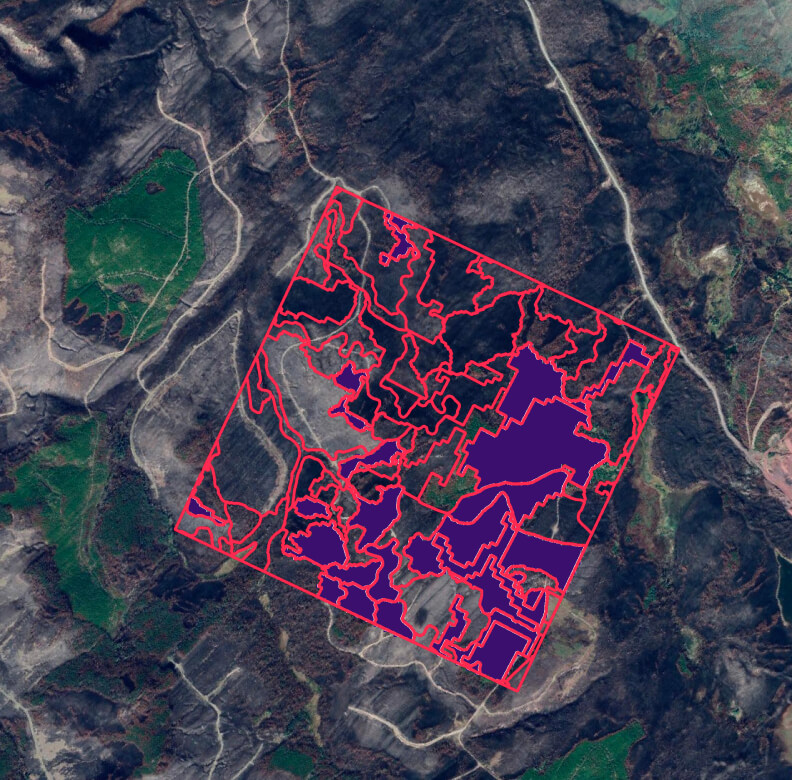

Wildfire burn scar near Ashcroft, British Columbia, Canada from 2021

As noted, primary insurance companies largely act as though it is not their responsibility to properly price for catastrophe risk within a single home insurance policy, since they are not the ones ultimately bearing that risk.

Here are the issues though:

If an insurer writes too many policies that are exposed to a single catastrophic event, and that event happens, they don’t want to be counting on luck to ensure they have enough reinsurance coverage.

An insurer shouldn’t wait until the annual reinsurance renewal to find out whether the policies it wrote over the last year share similar exposures. If they do, its reinsurance rates will go up unexpectedly, eroding returns.

Most insurers check their aggregations once a year when they do their reinsurance renewals. But this is a reactive approach. To facilitate a rapidly growing portfolio and control for aggregation risk, insurers must have an immediate and precise understanding of the accumulation of their risks to individual loss events.
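One way to picture an immediate view of accumulation is a running total of modelled loss per simulated event, checked every time a policy is about to be bound. The sketch below is a simplified illustration under that assumption. The `AccumulationTracker` class, the event identifiers and the per-event limit are hypothetical and do not describe how Skynet is implemented.

```python
from typing import Dict, Mapping


class AccumulationTracker:
    """Running total of modelled portfolio loss for each simulated loss event."""

    def __init__(self, limit_per_event: float) -> None:
        # Hypothetical risk appetite: maximum tolerated loss from any single event.
        self.limit_per_event = limit_per_event
        self.loss_by_event: Dict[str, float] = {}

    def breaches(self, policy_losses: Mapping[str, float]) -> Dict[str, float]:
        """Events whose accumulated loss would exceed the limit if the policy were added."""
        projected = {
            event_id: self.loss_by_event.get(event_id, 0.0) + loss
            for event_id, loss in policy_losses.items()
        }
        return {
            event_id: total
            for event_id, total in projected.items()
            if total > self.limit_per_event
        }

    def try_add_policy(self, policy_losses: Mapping[str, float]) -> bool:
        """Bind the policy only if no event limit is breached; report the outcome."""
        if self.breaches(policy_losses):
            return False
        for event_id, loss in policy_losses.items():
            self.loss_by_event[event_id] = self.loss_by_event.get(event_id, 0.0) + loss
        return True
```

Here `policy_losses` maps each simulated event to the loss that one policy would suffer in it. Checking at the point of sale means a concentration is caught when the policy is quoted, not at the next reinsurance renewal.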

As such we:

Multi-day composite of NASA MODIS data at 250 metre resolution

Personal property policies are the most highly commoditized insurance products that a consumer can buy. Convenience cannot be sacrificed for precision.

Legacy rating methods show a price instantaneously due to their simplistic nature. We cannot fail to deliver that same experience.

We needed to pack billions of calculations into 2 seconds to keep the same customer experience.

Furthermore, there are no shortcuts. You can’t pre-run models. Policyholders often update their risk data at the time of quote, and small changes in data can yield large impacts on risk, and therefore price.

on our team works in Scientific Research & Engineering Roles

of extensive research data is used to support our proprietary pricing approach

of loss event simulations, at minimum, are run for each and every peril to price a single insurance policy

The layers of modelling, computational breakthroughs, integration of a vast array of pertinent data and proper risk packaging make this an extraordinarily complex problem, one that even the best data scientists are ill-equipped to attack.

Here’s how we did it:

Rasterized version of a vegetation photo plot used in wildfire fuel mapping

Wildfire Fuel Map for Canada

This was our vision. We were the ones who had to execute on it. We did not want compromises.

We wrote hundreds of thousands of lines of code.

We compiled one of the industry’s largest research datasets.

We hired a team and developed a culture that was dedicated to only the purest & most sophisticated risk analysis.

If you want something done right, you have to do it yourself.

At times, we honestly questioned whether our approach to analyzing risk was technically feasible.

Standard catastrophe models can take 15 minutes or more to run. We needed models that could run in 2 seconds.

We had to analyze the risk on a single home. Existing science is not built for this level of precision.

We needed to analyze every peril including wildfire. Wildfire had never been modelled before in such detail for the applications we required.

Again and again, we hit roadblocks where the existing weather science, data science and computer science were not advanced enough to support the no-compromises approach we’d envisioned.

But one by one, we engineered ourselves over those roadblocks in the pursuit of the purest risk analysis.

Color-coded map of the terrestrial ecozones of Canada

Total burn footprints of the Lytton Creek fire (left, $65M in property damage) and the White Rock Lake fire (right, $60M in property damage) which occurred in British Columbia, Canada in July & August 2021.

The components of our architecture were meticulously selected to optimize the performance, robustness and longevity of the system. Even if it meant being unconventional.

There were tens of thousands of considerations along the way.

These are just a few:

Skynet – Automated, Scientific Underwriting. Done live at the point of sale.

The last decade has produced all the data & computational power necessary to enable a quantum leap in precision risk analysis. To harness these resources, the insurance industry requires a fundamental shift in the methods it uses to price for and manage risk.

We have re-engineered the entire pricing & risk evaluation process for property & casualty insurance.

In less than 2 seconds, live at the point of sale for a single insurance policy, we run:

by offering lower risk-adjusted prices to an enormous array of policyholders.

for a portfolio of risks, even while growing.

meaning improved resilience to catastrophic events & reinsurance optimization.

since the complexities of human centric underwriting now live in the computations of Skynet.

However, all this technical capability & all these opportunities are wasted if the technology isn’t deployed properly.

But here’s the thing...